Website owners use the robots.txt file to give web robots (such as search engine crawlers) instructions about their site. A compliant robot reads the robots.txt file before visiting a site, to check whether any directories should be excluded from the visit.

In the example file below, the 'User-agent' line applies the rule to all robots (the `*` wildcard), and the 'Disallow' line instructs them not to visit the /customers directory.

    User-agent: *
    Disallow: /customers
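To see what these two rules mean in practice, here is a minimal sketch using Python's standard `urllib.robotparser` module. The domain `www.example.com`, the robot name `ExampleBot`, and the helper `is_allowed` are illustrative assumptions and are not part of Customer Self Service.

```python
from urllib.robotparser import RobotFileParser

# The example rules from this article, one directive per line.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /customers
"""


def is_allowed(rules: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt rules let user_agent fetch url.

    Hypothetical helper for illustration only.
    """
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # www.example.com and ExampleBot are placeholders.
    for path in ("/customers/orders.aspx", "/products"):
        url = "https://www.example.com" + path
        print(path, is_allowed(EXAMPLE_RULES, "ExampleBot", url))
```

Under these assumptions, the path beneath /customers is reported as blocked and /products as allowed. Note that 'Disallow' matches by path prefix, so any URL whose path begins with /customers is covered by the rule.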

Site Administrators can maintain the robots.txt file directly from within Customer Self Service by following the procedure below.

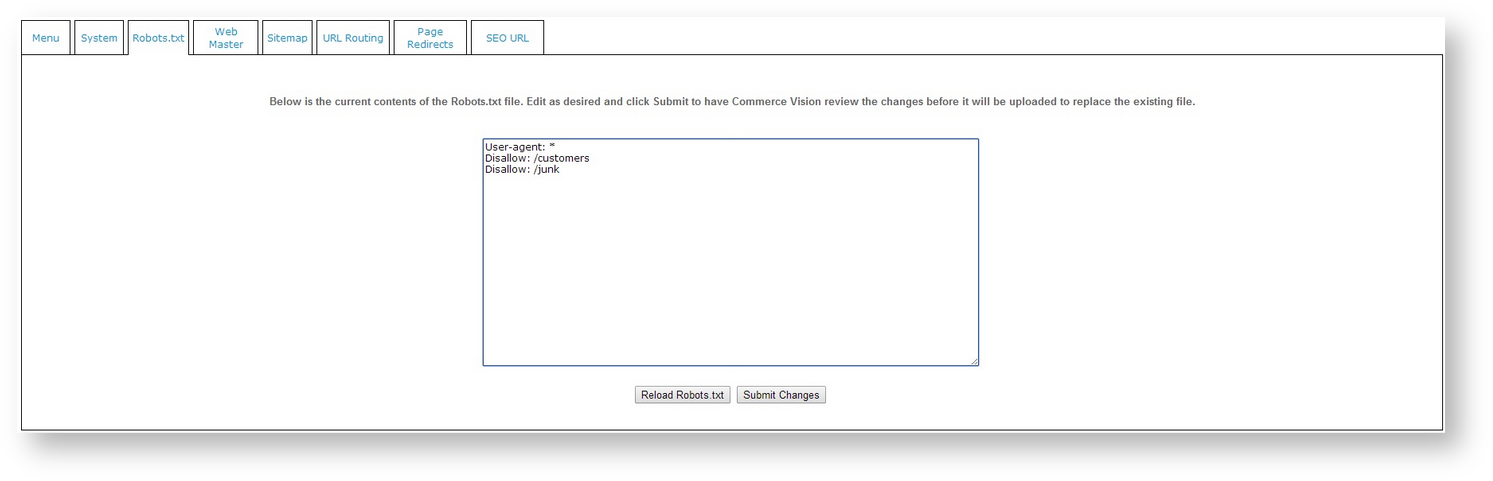

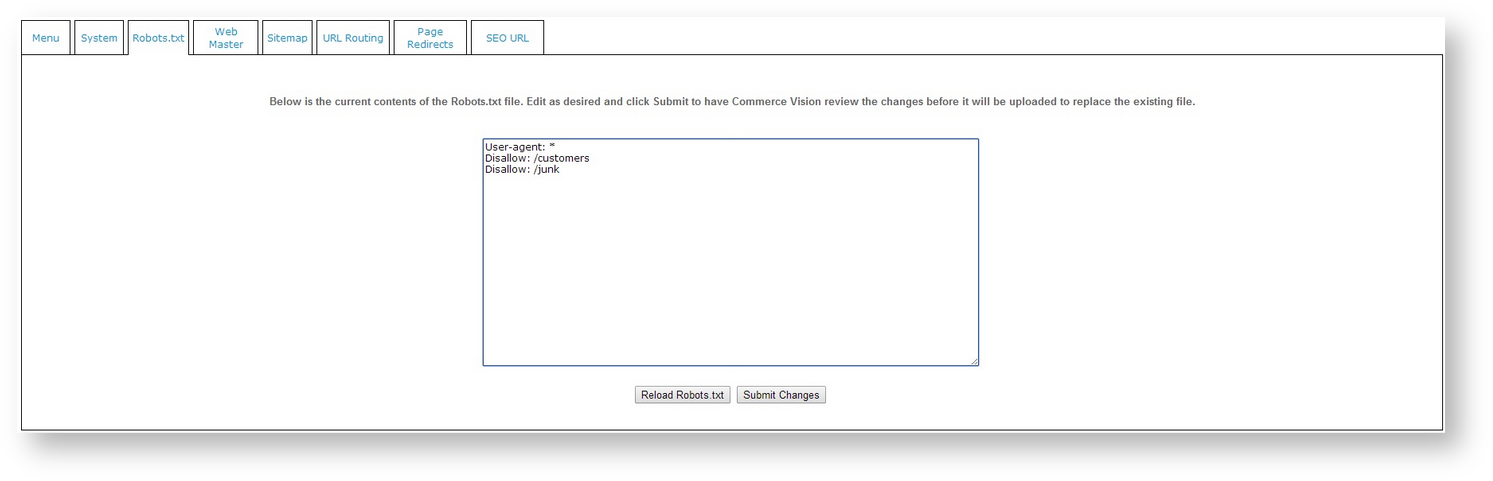

To View and Update the robots.txt file:

- Log in as an Administrator.

- Navigate to Settings → SEO Maintenance (/zSearchEngineOptimisationMaintenance.aspx).

- Click the Robots.txt tab.

- The contents of the current robots.txt file will be displayed in the dialogue box.

- Edit the file contents directly on screen, or copy and paste data from another file.

- In the event of an error, click the Reload Robots.txt button to start again.

- Click the Submit Changes button.

The revised file will be sent to Commerce Vision for review, and you will be advised once the new file has been uploaded.
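Because the edited contents go through a review step, it can be worth checking them for obvious slips before clicking Submit Changes. The following is a rough sketch of such a check, not a Commerce Vision tool: the function `find_problems` and the set of directives it accepts are assumptions chosen for illustration, and some robots honour directives not listed here.

```python
# Directives this sketch treats as valid (an assumed, non-exhaustive list).
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def find_problems(contents: str) -> list[str]:
    """Return human-readable warnings for suspicious robots.txt lines.

    Hypothetical helper for illustration only.
    """
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(contents.splitlines(), start=1):
        # Drop comments and surrounding whitespace; skip blank lines.
        line = raw.split("#", 1)[0].strip()
        if not line:
            continue
        name, separator, _value = line.partition(":")
        name = name.strip().lower()
        if not separator:
            problems.append(f"line {number}: missing ':' separator")
            continue
        if name not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unrecognised directive '{name}'")
            continue
        if name == "user-agent":
            seen_user_agent = True
        elif name in {"allow", "disallow"} and not seen_user_agent:
            problems.append(
                f"line {number}: '{name}' appears before any User-agent line"
            )
    return problems


if __name__ == "__main__":
    draft = "User-agent: *\nDissalow: /customers\n"
    for problem in find_problems(draft):
        print(problem)
```

A check like this only catches simple typing mistakes, such as a misspelt directive or a rule with no preceding User-agent line. It does not confirm that the rules block the paths you intend.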

For further information on robots.txt, see http://www.robotstxt.org/robotstxt.html.
